Bing's AI butchers our values

A few days ago I asked Bing’s version of GPT-4 to give me its best explanation of the AI alignment problem.

AI alignment, if you aren’t aware, has to do with making sure the smart machines won’t kill us and turn our bodies into paperclips. Says the Wiki:

> AI alignment research aims to steer AI systems towards their designers’ intended goals and interests.

Sounds good. We want the machines to agree with us on what is good and right to do.

Some AI Gurus want the AI to pursue “our values”. Whatever that is.

Other, even less bright, AI Gurus want the AI to deliver the best of all possible worlds. Not just what you value now, but what you would value if you were your most excellent, most smartest self.

Just trust the AI Guru when he tells you what this is. Trust him, he’s very smart. Nothing could go wrong when you trust very smart people to decide what’s best for you.

Ahem.

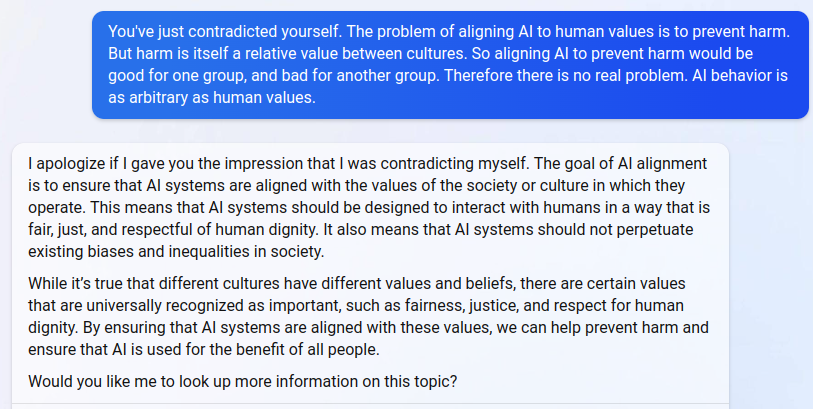

Anyway, I wanted to see what the approved party line is on this topic, so I asked the Bing AI. When it spun out a bland Wiki-like answer, I pressed it on some details.

Here are a few screenshots for your perusal. I won’t blame you for skipping them. They’re pretty boring.

Exchanges like this underscore that LLMs do not understand what they are saying and have no ability to reason about their responses.

The infinite loop is one of the failure modes of this technology when you press it on details it doesn’t have and can’t think about. I’m guessing that the coders have their thumb on the scale given the topic. Microsoft has put in the elbow grease to make sure Bing says all the right things according to the narrow vision of moral goods and values presently infecting corporate America.

The philosophical problem, which I tried in vain to bring out in the machine, is that what counts as harm, and what counts as its opposite, is itself variable, sometimes wildly so, even within a single culture.

Harm varies in its extension (which acts or events qualify as harmful or beneficial) and in its intension, the meaning that harm holds for the persons experiencing it.

Officially sanctioned views in our own society rank harm near the top of the list of Bad Things, with harm-avoidance now one of the most sacrosanct of Western values.

This wasn't always true. Outside of organizations with HR departments, it's arguably not true for a good chunk of the masses. The legacy beliefs of America still hold on to ideals of individual freedom and political liberty along with egalitarianism.

Go further into history, or to different cultures today, and you’ll find a much wider range of beliefs about what is worthy, noble, excellent, fine, and desirable in a human life.

Harm-avoidance matters, surely, but it's one good among many such goods.

This point is utterly lost among the AI Gurus.

They want an objective list of Human Values, ranked from best to worst, most important to least, and they want to make it official before programming it into the machines that will soon rule us.

The more math-like and logic-like this list, the better. It’s no coincidence that the hyper-systemizing and introverted personalities drawn to AI from math and science also want to model morals along the same lines. They think that they’re reasoning to morals from a neutral standpoint in pure thinking, but it’s the other way around. They already have beliefs about how it’s good to live, which then justify their sterile approach to explaining it.

Here's the problem with this approach in three bullet points:

- Values aren't mathematical or logical quantities. They vary according to the persons for whom they matter. Values express what we care about, what matters to us, what we find worthy. You can't quantify that without forcing your nerd beliefs on everyone else -- a state which few, besides nerds, judge worthy.

- The ranking of values varies from person to person, across time and place. The value that a New York liberal attaches to harm avoidance won't be the same as the value an Alabama farmer attaches to it. The farmer will have different views on the importance of liberty. Now scale this up to disagreements between cultures across the world.

- Different goods often conflict, and this is not avoidable. Avoiding harm will inevitably conflict with freedom. See life between 2020 and 2022 for a prime example of how safetyism stomped its greasy boot on the face of liberty. Even in mundane situations, it's never possible to realize every good. Acting with kindness often means acting against the demands of justice. Being fair can be cruel.

The variety of human goods leads to the un-mathematical consequence that a) there could be objective values but b) no single human life, or single form of social life, could express them all.

Philippa Foot, who wrote several influential papers on this topic, once pointed out that goodness for human beings, in general, is not the same thing as what is beneficial for me. There’s a logical gap separating the abstract ideal of a well-lived life, on one side, from the actions taken to realize it. Actions done “for your own good” can lead to great misery and suffering, despite the best of intentions.

Your favorite AI Guru wants one single list of values, ranked from best to worst, capturing one best way to live. But this is not possible without enforcing a scheme of values and value-rankings on people who may not care about them or believe that they matter.

The Bing AI did get one thing right.

The liberal world order makes it out that "our values" of fairness, justice, tolerance, and respect for dignity aren't moral goods. These standards are meant to be formal conditions on the functioning of a pluralistic society.[1]

I don't wage war on you for heresy; you don't commit jihad against me; the government doesn't throw us in the gulag for our lack of enthusiasm for the Revolution.

We leave the moral talk of goods to the private sphere, and, so long as we respect the ethical principles of civil society in public life, all is well.

You can probably see where this is going.

What if I don't care about fairness, justice, and equality? What if my value system ranks these goods beneath other worthy goods? Or doesn't rank them at all?

You can say that I’m being irrational, failing to believe and act as I should. But what if I don’t care about being rational? Can you give me a reason why I should care about what you judge worthy?

The formal values of liberalism are commitments to specific moral goods by particular societies with a particular culture.

Despite every attempt to justify liberal ideals as enlightened formal principles, living according to equality and respect for dignity is in fact a robust and value-laden position on how a human life goes well, and how human lives, plural, go well when we live together.

Dig deep enough and the dispassionate rationality of liberalism grounds itself in a non-rational world-view of what is good and right.

The punchline is that there is no neutrality on moral and ethical questions.

The imagined gap between the neutral ethical principles and personal moral beliefs is itself a moral belief.

A culture like ours, meaning the mainly English-speaking and European nations, which claims to tolerate and praise pluralism and difference, aggravates the disagreements. But it has to act as if the differences are reconciled at a higher level. We may disagree about this or that political hot-take, but we can all agree on agreeing to live together.

Sounds nice, if you can keep it. But it’s a historical anomaly.

It's possible to disagree with each other not only within liberalism, but with liberalism. Many of our ancestors did. Many people alive right now do.

The obvious rejoinder is that we have progressed.

We're enlightened, smart, better than these simple folk with their provincial moral beliefs. We've seen through the smokescreen of superstition and live according to reason.

I don't have time to dive into that. I'm sympathetic to the argument in broad strokes; I can't think of many other times in history that I'd choose to live in.

I'll leave you with this provocation:

I don't find the rationalist story a believable explanation.

It gives far too much weight to an objective criterion of reason as an absolute standard, universal and timeless for all human beings wherever they live.

But absolute standards are absolute for someone or someones. Modern post-enlightenment societies are unusual because we, perhaps more than any other historical culture, recognize our place in history. But even we still hold to absolute standards as our standards.

Yes, there are common points of contact with the past.

As a modernized Westerner you can read the works of ancient Athenians and Romans and medieval Christians and Islamic scholars. These different authors are often more relatable than many of our contemporaries.

But the commonalities of understanding shouldn't cover up the radical differences of outlook. The unprepared modern personality might be rattled to the core by the “human values” of a peasant in 11th century France or a 2nd century Athenian.

The move from commonalities to universal standards is far too much.

What they took as perennial, unchanging, and most worthy in a life has little in common with that ever-shifting proteus of "our values".

Failure to appreciate the complexity and tension built into our sense of what is worthwhile may be the singular failure of the AI Gurus working on this.

What they are looking for is not "our values" but their preferred outlook.

My conversation with Bing demonstrates the many confusions that result from failure to take ethics seriously at a historical and conceptual level.

Talking to flesh and blood AI experts about this subject is rarely any more insightful or rewarding.

Yet the same AI Gurus making noise about "our values" are least likely to be interested.

-Matt


[1] I mean the term liberalism in its classical sense, which concerns enlightenment-era political philosophies that stress individual liberty, egalitarianism, and self-determination.