The generative AI apocalypse turned out different

Instead of HK tanks and T-800 exoskeletons marching across a sea of human skulls, we got static drowning out all meaning: an infinite white noise.

What happens when the snake eats its own tail?

You're living it, that's what.

This result was entirely predictable, by the way, for reasons I'll get to at the end of this article.

Here's the basic form of the problem.

AI-driven algorithms show you more and more and more of less and less and less.

They optimize for what you've already liked, consumed, wanted, or shown the slightest interest in.

The more humans respond to optimized choices, the more optimized choices they get.

That statement generalizes across scales. The arms race happening between you and your Netflix recommendations is a microcosm of the economic macrocosm pressuring every industry to roll out "AI".

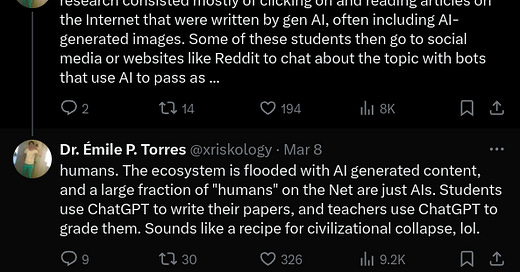

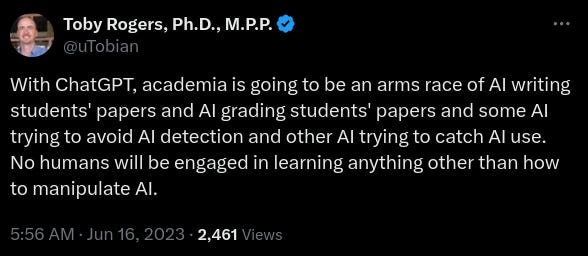

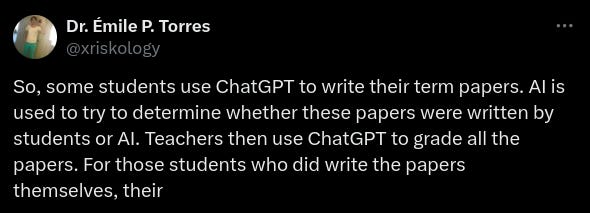

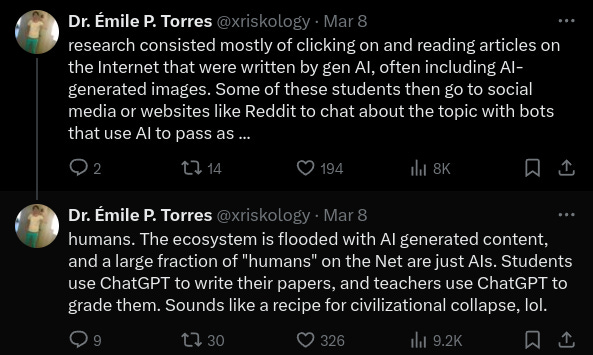

The tale in these tweets shows us the consequences of a civilization optimizing for optimization.

Pretty soon the pattern interrupt becomes the pattern.

That's been the state of things in mass culture with instant feedback and zero marginal cost of production. A new thing appears, creating waves, which leads to copycats, which dulls the novelty, and then it's on to the next thing.

These LLM tools force each trip through the loop onto a tighter path than the last.

There's less novelty, more similarity.

Repeat the cycle enough times and you've hyper-focused your choices and desires down a pathway of atomic thickness.

If the feedback loop continues, we're going to see exponential growth of similar options within an exponential contraction of the range of available choices.
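
If you want to watch that narrowing happen, here's a toy sketch. It is not how any real recommender works. The topic count, the `boost` factor, and the tiny head start for topic 0 are all made-up assumptions; the only idea it carries is that the feed mirrors past engagement and engagement follows the feed.

```python
def simulate_feed(num_topics=10, rounds=30, boost=1.2):
    """Toy rich-get-richer loop: the feed mirrors past engagement,
    and engagement follows the feed."""
    # Hypothetical starting point: even interest, with topic 0 a hair ahead.
    weights = [1.0] * num_topics
    weights[0] = 1.1
    effective_choices = []
    for _ in range(rounds):
        total = sum(weights)
        shares = [w / total for w in weights]
        # Inverse Simpson index: about 10 when attention is spread evenly
        # over 10 topics, heading toward 1 as a single topic takes over.
        effective_choices.append(1.0 / sum(s * s for s in shares))
        # Topics that already fill more of the feed get a bigger bump.
        weights = [w * (1.0 + boost * s) for w, s in zip(weights, shares)]
    return effective_choices


history = simulate_feed()
print(f"effective choices: {history[0]:.2f} at the start, "
      f"{history[-1]:.2f} after {len(history)} rounds")
```

Nothing gets removed from the menu. All ten topics are still technically there. The number of choices that actually get attention just keeps shrinking, round after round.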

AI's leading us to the simulation of depth and breadth while the ecology of real choices gets shallower by the second.

Have you ever been in the room when somebody takes a microphone and holds it too close to the speaker?

That shrieking, echoing, ear-splitting noise is called "feedback".

Feedback happens when the input into a system receives the system's output, which adds to the original input and then feeds back into the system.

It's a self-amplifying loop. The signal gets larger with each trip through the system.

In mathematics this leads off to infinities. In the real world, fortunately, nature doesn't care about math, so material limits prevent the speaker from exploding. But it sure would love to explode if it knew the math.
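
Here's a minimal sketch of that loop, assuming the simplest possible model: each pass, the output is multiplied by a `gain` and added back onto the original `source`. The names, the numbers, and the `limit` parameter standing in for the speaker's material limits are my own illustrations, not a model of real audio gear.

```python
def feedback_loop(source, gain, steps, limit=None):
    """Feed a system's output back into its own input, step by step."""
    level = 0.0
    history = []
    for _ in range(steps):
        # The previous output re-enters alongside the original input.
        level = source + gain * level
        if limit is not None:
            # Stand-in for material limits: hardware can only push so much.
            level = min(level, limit)
        history.append(level)
    return history


quiet = feedback_loop(source=1.0, gain=0.5, steps=50)      # gain below 1 settles down
runaway = feedback_loop(source=1.0, gain=1.5, steps=50)    # gain above 1 keeps growing
clipped = feedback_loop(source=1.0, gain=1.5, steps=50, limit=100.0)

print(quiet[-1], runaway[-1], clipped[-1])
```

With a gain below 1, the loop settles at a finite level. Above 1, the math runs away, and the only thing that stops it is the cap.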

This is where we are with generative AI right now.

The internet as you know it is turning into a shrieking wall of noise with the volume dial slowly easing up. If it continues, you won't be able to hear conversations because the internet will be the equivalent of an ear-bleeding background hiss drowning out any meaningful signal.

I don't think it will get that bad, for a few reasons.

One, the tech itself is turning out lukewarm at best.

The more we feed human responses into these models, the dumber and more incoherent they become.

The funny part is that the latest models are now training on datasets infected by AI-produced works.

AI's learning from data that was already processed through AI.

Back in the days when we still had real copy machines, you learned a neat lesson when you made a copy, and then made a copy of the copy.

Do it too many times and you end up with unreadable blots on the page.

A copy is never perfect. Each copy of the copy magnifies the flaws.
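
The copy-of-a-copy effect is easy to sketch. This is a toy copier, not a model of how AI training works: the "page" is a row of light and dark pixels, and I'm assuming each pass lets 10% of every pixel bleed into each neighbor. Those numbers are invented for illustration.

```python
def photocopy(page):
    """One pass through an imperfect copier: each pixel bleeds into its neighbors."""
    last = len(page) - 1
    copy = []
    for i, pixel in enumerate(page):
        left = page[max(i - 1, 0)]       # edge pixels reuse themselves as neighbor
        right = page[min(i + 1, last)]
        copy.append(0.1 * left + 0.8 * pixel + 0.1 * right)
    return copy


def contrast(page):
    """Gap between the darkest and lightest pixel on the page."""
    return max(page) - min(page)


original = [0.0, 1.0] * 8                # crisp alternating light and dark stripes
generations = [original]
for _ in range(10):
    # Each new copy is made from the previous copy, never from the original.
    generations.append(photocopy(generations[-1]))

contrasts = [contrast(page) for page in generations]
print([round(c, 3) for c in contrasts])
```

Each pass only loses a little. The losses stack, because every generation starts from the last one's smudges.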

AI's now optimizing for stupid AI content.

Two, I don't care about the internet as you know it.

The five or six dominant social platforms, the search engines, the ecology of "the web" that adapted itself, evolution-like, to suit the needs of five apex companies... I don't care.

If AI floods that ponzi scheme with so much "content" that it becomes about as useful as a beer with a cigarette butt, I'm okay with that. More than okay.

It means that the current model of doing things online has found its breaking point, and it will either adapt or die.

As I write this, I'm kinda glad for AI. I've been waiting for the over-centralized media-driven internet scheme to break up or fall apart since at least 2012.

If GPT or whatever ends up destroying social media and search engines as they've existed since ~2010, I'll buy it something nice.

Three, you can't expect any process to continue on in a straight line forever.

That's a general piece of wisdom about life, nature, and reality.

Without fail, every predictor, from the optimists to the doomers, looks at the state of things Right Now and sees the future at the other end of a straight line.

That's dumb.

You know what happens when the shriek of feedback gets too loud and uncomfortable?

Everybody picks up and leaves the room.

Incentives change as conditions change. Every change changes the conditions for change.

The more perceptive commentators understand this. The shape of history is not a straight line but a series of discontinuities.

The last 18 months of AI [sic] hype made this clear beyond doubt.

First, any self-amplifying feedback loop will sooner or later encounter hard limits. Speakers don't explode when you hold the mic next to them because their physical parts and available energy operate by different laws.

AI looks scary because most people, and I even mean the people who should know better, treat it as a scary demon with supernatural properties.

You think AI doesn't have hard physical limits? You'd be mighty interested to know what it costs to keep these things online. It's easy to think of algorithms as digital illusions, but they exist in real hardware with real electricity bills.

Our would-be oracles don't think this way because it requires imagination instead of typing engagement-bait.

Second, and this is the bigger deal, higher-order changes are not obvious. Some fall into the category of Unknown Unknowns. You can't predict what you can't predict, and that's the nature of history.

I've written here many times that we misunderstand AI's true threat. The machine isn't going to "wake up" one day, possessing a fully-formed mature human consciousness, and start killing everything.

AI isn't becoming more like a human person.

AI is the ultimate attack on the human-friendly anthropocentric universe.

That means a lot of things, but the main point is that AI is nothing without us -- just as many humans now see themselves as lesser or nothing without AI.

Threats from AI appear at every place where AI interfaces with human beings.

What do you think? Leave a comment and let me know 👇

Thanks for reading.

Matt

I'm honestly surprised to see that teachers might use AI to catch students who use AI. Why don't they just require sources for data and look up the sources to make sure they're legit? Oh, I guess that takes time. Our teachers today may be lazy. I don't understand why anyone would use AI as a "source" for any information. I have never seen a person say that AI should be treated as a source. If teachers didn't allow it (and checked sources) then this wouldn't be a big thing for them.

I don't see how anyone could use it as a source. I enjoy using it for creative prompting and images, but would never trust it as a foundation for a paper or argument. If people understood what AI is they wouldn't either. Why aren't we teaching that?

We've seen the feedback loop in social media for a while. That's an interesting way to describe it. I think it's led to a lot of fringe beliefs looking like popular opinions, like how Bud Light sponsored Dylan M. There are real-world responses that should self-correct the system. I'm just not sure how long it will take.